Kernel Community Drafts a Plan For Replacing Linus Torvalds

The Linux kernel community has formalized a continuity plan for the day Linus Torvalds eventually steps aside, defining how the process would work to replace him as the top-level maintainer. ZDNet’s Steven Vaughan-Nichols reports: The new “plan for a plan,” drafted by longtime kernel contributor Dan Williams, was discussed at the latest Linux Kernel Maint … ⌘ Read more

DOT Plans To Use Google Gemini AI To Write Regulations

The Trump administration is planning to use AI to write federal transportation regulations, ProPublica reported on Monday, citing the U.S. Department of Transportation records and interviews with six agency staffers. From the report: The plan was presented to DOT staff last month at a demonstration of AI’s “potential to revolutionize the way we draft rulemakings,” a … ⌘ Read more

Fedora Games Lab Approved To Switch To KDE Plasma, Become A Better Linux Gaming Showcase

Back in December we reported on drafted plans for revitalizing Fedora Games Lab to be a modern Linux gaming showcase. This Fedora Labs initiative has featured some open-source games paired with an Xfce desktop while moving forward they are looking to better position it as a modern Linux gaming showcase… ⌘ Read more

France Targets Australia-Style Social Media Ban For Children Next Year

An anonymous reader quotes a report from the Guardian: France intends to follow Australia and ban social media platforms for children from the start of the 2026 academic year. A draft bill preventing under-15s from using social media will be submitted for legal checks and is expected to be debated in parliament early in the new year. … ⌘ Read more

China Drafts World’s Strictest Rules To End AI-Encouraged Suicide, Violence

An anonymous reader quotes a report from Ars Technica: China drafted landmark rules to stop AI chatbots from emotionally manipulating users, including what could become the strictest policy worldwide intended to prevent AI-supported suicides, self-harm, and violence. China’s Cyberspace Administration proposed the rules on Saturday. … ⌘ Read more

North Melbourne still confident of landing former AFLW number one pick

North Melbourne have been unable to trade for Kristie-Lee Weston-Turner but the two-time defending AFLW premiers are still confident they’ll secure the former number one draft pick. ⌘ Read more

Bulldogs’ top AFLW draft pick wants to join Kangaroos

The Kangaroos’ domination of the AFLW is set to be given another boost, with a former number one draft pick confirming she wants to head to North. ⌘ Read more

Putin says US-Ukraine text could form basis for peace agreement

The US and Ukraine are set to hold further talks about a draft peace deal to bring to an end the conflict. ⌘ Read more

All my newly added test cases failed, that movq thankfully provided in https://git.mills.io/yarnsocial/twtxt.dev/pulls/28#issuecomment-20801 for the draft of the twt hash v2 extension. The first error was easy to see in the diff. The hashes were way too long. You’ve already guessed it, I had cut the hash from the twelfth character towards the end instead of taking the first twelve characters: hash[12:] instead of hash[:12].
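The slicing mix-up is easy to reproduce. The sketch below is in Python and uses a SHA-256 hex digest purely as a stand-in; the real algorithm and encoding are whatever the twt hash v2 draft specifies and are not reproduced here. The feed URL, timestamp, and text are made-up sample values.

```python
import hashlib

# Stand-in digest: SHA-256 in hex is an assumption for illustration only,
# not the algorithm specified by the twt hash v2 draft.
payload = "https://example.com/twtxt.txt\n2024-09-25T12:00:00Z\nHello"
digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()  # 64 hex characters

wrong = digest[12:]  # drops the first twelve characters and keeps the rest
right = digest[:12]  # keeps only the first twelve characters

print(len(wrong), len(right))
```

With a 64-character digest the wrong slice leaves 52 characters, which fits the "way too long" symptom described above.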

After fixing this rookie mistake, the tests still all failed. Hmmm. Did I still cut the wrong twelve characters? :-? I even checked the Go reference implementation in the document itself. But it read basically the same as mine. Strange, what the heck is going on here?

Turns out that my vim replacements to transform the Python code into Go code butchered all the URLs. ;-) The order of operations matters. I first replaced the equals with colons for the subtest struct fields and then wanted to transform the RFC 3339 timestamp strings to time.Date(…) calls. So, I replaced the colons in the time with commas and spaces. Hence, my URLs then also all read https, //example.com/twtxt.txt.
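The same trap can be shown outside of vim. This is only a sketch in Python, not the substitutions that were actually run; the timestamp value and the `scoped` helper are hypothetical. A blanket replacement hits every colon, while a pattern limited to the `HH:MM:SS` part leaves URLs alone.

```python
import re

url = "https://example.com/twtxt.txt"
timestamp = "2024-09-25T12:34:56Z"  # made-up sample value

# A blanket substitution touches every colon, including the one after "https".
butchered = url.replace(":", ", ")

# Scoping the pattern to HH:MM:SS only rewrites the time of day.
TIME = re.compile(r"(\d{2}):(\d{2}):(\d{2})")


def scoped(text: str) -> str:
    """Replace the colons of an HH:MM:SS group with comma-space separators."""
    return TIME.sub(r"\1, \2, \3", text)


print(butchered)
print(scoped(url))
print(scoped(timestamp))
```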

But that was it. All tests green. \o/

White House Prepares Executive Order To Block State AI Laws

An anonymous reader quotes a report from Politico: The White House is preparing to issue an executive order as soon as Friday that tells the Department of Justice and other federal agencies to prevent states from regulating artificial intelligence, according to four people familiar with the matter and a leaked draft of the order obtained by POLITICO. The dr … ⌘ Read more

Spot the difference: Leaked WA gas report changed before it was tabled in parliament

The report was tabled in parliament on Tuesday afternoon after the draft version, meant to be a confidential cabinet document, was leaked. But there are notable changes. ⌘ Read more

Plan for children to face life sentences draws wave of condemnation

Premier Jacinta Allan revealed that complex legislation was still being drafted, with a bill to be introduced to parliament by the end of this year. ⌘ Read more

@prologic@twtxt.net Let’s go through it one by one. Here’s a wall of text that took me over 1.5 hours to write.

The criticism of AI as untrustworthy is a problem of misapplication, not capability.

This section says AI should not be treated as an authority. This is actually just what I said, except the AI phrased/framed it like it was a counter-argument.

The AI also said that users must develop “AI literacy”, again phrasing/framing it like a counter-argument. Well, that is also just what I said. I said you should treat AI output like a random blog and you should verify the sources, yadda yadda. That is “AI literacy”, isn’t it?

My text went one step further, though: I said that when you take this requirement of “AI literacy” into account, you basically end up with a fancy search engine, with extra overhead that costs time. The AI missed/ignored this in its reply.

Okay, so, the AI also said that you should use AI tools just for drafting and brainstorming. Granted, a very rough draft of something will probably be doable. But then you have to diligently verify every little detail of this draft – okay, fine, a draft is a draft, it’s fine if it contains errors. The thing is, though, that you really must do this verification. And I claim that many people will not do it, because AI outputs look sooooo convincing, they don’t feel like a draft that needs editing.

Can you, as an expert, still use an AI draft as a basis/foundation? Yeah, probably. But here’s the kicker: You did not create that draft. You were not involved in the “thought process” behind it. When you, a human being, make a draft, you often think something like: “Okay, I want to draw a picture of a landscape and there’s going to be a little house, but for now, I’ll just put in a rough sketch of the house and add the details later.” You are aware of what you left out. When the AI did the draft, you are not aware of what’s missing – even more so when every AI output already looks like a final product. For me, personally, this makes it much harder and slower to verify such a draft, and I mentioned this in my text.

Skill Erosion vs. Skill Evolution

You, @prologic@twtxt.net, also mentioned this in your car tyre example.

In my text, I gave two analogies: The gym analogy and the Google Translate analogy. Your car tyre example falls in the same category, but Gemini’s calculator example is different (and, again, gaslight-y, see below).

What I meant in my text: A person wants to be a programmer. To me, a programmer is a person who writes code, understands code, maintains code, writes documentation, and so on. In your example, a person who changes a car tyre would be a mechanic. Now, if you use AI to write the code and documentation for you, are you still a programmer? If you have no understanding of said code, are you a programmer? A person who does not know how to change a car tyre, is that still a mechanic?

No, you’re something else. You should not be hired as a programmer or a mechanic.

Yes, that is “skill evolution” – which is pretty much my point! But the AI framed it like a counter-argument. It didn’t understand my text.

(But what if that’s our future? What if all programming will look like that in some years? I claim: It’s not possible. If you don’t know how to program, then you don’t know how to read/understand code written by an AI. You are something else, but you’re not a programmer. It might be valid to be something else – but that wasn’t my point, my point was that you’re not a bloody programmer.)

Gemini’s calculator example is garbage, I think. Crunching numbers and doing mathematics (i.e., “complex problem-solving”) are two different things. Just because you now have a calculator, doesn’t mean it’ll free you up to do mathematical proofs or whatever.

What would have worked is this: Let’s say you’re an accountant and you sum up spendings. Without a calculator, this takes a lot of time and is error prone. But when you have one, you can work faster. But once again, there’s a little gaslight-y detail: A calculator is correct. Yes, it could have “bugs” (hello Intel FDIV), but its design actually properly calculates numbers. AI, on the other hand, does not understand a thing (our current AI, that is), it’s just a statistical model. So, this modified example (“accountant with a calculator”) would actually have to be phrased like this: Suppose there’s an accountant and you give her a magic box that spits out the correct result in, what, I don’t know, 70-90% of the time. The accountant couldn’t rely on this box now, could she? She’d either have to double-check everything or accept possibly wrong results. And that is how I feel like when I work with AI tools.

Gemini has no idea that its calculator example doesn’t make sense. It just spits out some generic “argument” that it picked up on some website.

3. The Technical and Legal Perspective (Scraping and Copyright)

The AI makes two points here. The first one, I might actually agree with (“bad bot behavior is not the fault of AI itself”).

The second point is, once again, gaslighting, because it is phrased/framed like a counter-argument. It implies that I said something which I didn’t. Like the AI, I said that you would have to adjust the copyright law! At the same time, the AI answer didn’t even question whether it’s okay to break the current law or not. It just said “lol yeah, change the laws”. (I wonder in what way the laws would have to be changed in the AI’s “opinion”, because some of these changes could kill some business opportunities – or the laws would have to have special AI clauses that only benefit the AI techbros. But I digress, that wasn’t part of Gemini’s answer.)

tl;dr

Except for one point, I don’t accept any of Gemini’s “criticism”. It didn’t pick up on lots of details, ignored arguments, and I can just instinctively tell that this thing does not understand anything it wrote (which is correct, it’s just a statistical model).

And it framed everything like a counter-argument, while actually repeating what I said. That’s gaslighting: When Alice says “the sky is blue” and Bob replies with “why do you say the sky is purple?!”

But it sure looks convincing, doesn’t it?

Never again

This took so much of my time. I won’t do this again. 😂

@movq@www.uninformativ.de Gemini liked your opinion very much. Here is how it countered:

1. The User Perspective (Untrustworthiness)

The criticism of AI as untrustworthy is a problem of misapplication, not capability.

- AI as a Force Multiplier: AI should be treated as a high-speed drafting and brainstorming tool, not an authority. For experts, it offers an immense speed gain, shifting the work from slow manual creation to fast critical editing and verification.

- The Rise of AI Literacy: Users must develop a new skill—AI literacy—to critically evaluate and verify AI’s probabilistic output. This skill, along with improving citation features in AI tools, mitigates the “gaslighting” effect.

The fear of skill loss is based on a misunderstanding of how technology changes the nature of work; it’s skill evolution, not erosion.

- Shifting Focus to High-Level Skills: Just as the calculator shifted focus from manual math to complex problem-solving, AI shifts the focus from writing boilerplate code to architectural design and prompt engineering. It handles repetitive tasks, freeing humans for creative and complex challenges.

- Accessibility and Empowerment: AI serves as a powerful democratizing tool, offering personalized tutoring and automation to people who lack deep expertise. While dependency is a risk, this accessibility empowers a wider segment of the population previously limited by skill barriers.

The legal and technical flaws are issues of governance and ethical practice, not reasons to reject the core technology.

- Need for Better Bot Governance: Destructive scraping is a failure of ethical web behavior and can be solved with better bot identification, rate limits, and protocols (like enhanced robots.txt). The solution is to demand digital citizenship from AI companies, not to stop AI development.

Advanced Documentation Retrieval on FreeBSD

I thought it might be nice to repost this considering the date.

When I originally wrote this I was planning an interview with Michael W. Lucas and at some point “leaked” this draft article to him. After about a day I got the email equivalent of a spit take and a ton of laughter.

Enjoy!

Russia Moves to Year-Round Military Draft Amid Wartime Manpower Needs ⌘ Read more

Turkish government drafts anti-LGBTQ+ laws threatening prison for trans people and same-sex couples ⌘ Read more

How to Add MCP Servers to Claude Code with Docker MCP Toolkit

AI coding assistants have evolved from simple autocomplete tools into full development partners. Yet even the best of them, like Claude Code, can’t act directly on your environment. Claude Code can suggest a database query, but can’t run it. It can draft a GitHub issue, but can’t create it. It can write a Slack message,… ⌘ Read more

Pretty happy with my zs-blog-template starter kit for creating and maintaining your own blog using zs 👌 Demo of what the starter kit looks like here – Basic features include:

- Clean layout & typography

- Chroma code highlighting (aligned to your site palette)

- Accessible copy-code button

- “On this page” collapsible TOC

- RSS, sitemap, robots

- Archives, tags, tag cloud

- Draft support (hidden from lists/feeds)

- Open Graph (OG) & Twitter card meta (default image + per-post overrides)

- Ready-to-use 404 page

As well as custom routes (redirects, rewrites, etc) to support canonical URLs or redirecting old URLs as well as new zs external command capability itself that now lets you do things like:

$ zs newpost

to help kick-start the creation of a new post with all the right “stuff”™ ready to go and then pop open your $EDITOR 🤞

TNO Threading (draft):

Each origin feed numbers new threads (tno:N). Replies carry both (tno:N) and (ofeed:<origin-url>). Thread identity = (ofeed, tno).

- Roots: (tno:N) (implicit ofeed=self).

- Replies: (tno:N) (ofeed:<url>).

- Clients: increment tno locally for new threads, copy tags on reply.

- Subjects optional, not required.

…
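A rough Python sketch of how a client might derive the thread identity from these tags. Everything here is an assumption layered on the draft: the regular expressions, the `thread_id` and `next_tno` helpers, the example feed URLs, and the choice to start numbering at 1 are all hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical tag syntax, taken literally from the draft text above.
TNO = re.compile(r"\(tno:(\d+)\)")
OFEED = re.compile(r"\(ofeed:([^)\s]+)\)")


class ThreadId(NamedTuple):
    ofeed: str
    tno: int


def thread_id(text: str, own_feed: str) -> Optional[ThreadId]:
    """Return (ofeed, tno) for a twt, or None if it carries no tno tag."""
    tno = TNO.search(text)
    if tno is None:
        return None
    ofeed = OFEED.search(text)
    # Roots omit the ofeed tag, so the feed the twt was found in is the origin.
    origin = ofeed.group(1) if ofeed else own_feed
    return ThreadId(origin, int(tno.group(1)))


def next_tno(own_texts: list[str]) -> int:
    """Next number for a new thread: one above the highest root in our own feed."""
    roots = [
        int(m.group(1))
        for t in own_texts
        if (m := TNO.search(t)) and not OFEED.search(t)
    ]
    return max(roots, default=0) + 1  # starting at 1 is an assumption


root = thread_id("(tno:3) Hello", "https://a.example/twtxt.txt")
reply = thread_id("(tno:3) (ofeed:https://a.example/twtxt.txt) Hi", "https://b.example/twtxt.txt")
print(root == reply)
```

A root and a reply found in different feeds resolve to the same pair, which is what lets a client group them without hashing anything.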

@lyse@lyse.isobeef.org True, at least old versions of KDE had icons:

https://movq.de/v/0e4af6fea1/s.png

GNOME, on the other hand, didn’t, at least according to my old screenshots from 2007:

https://www.uninformativ.de/desktop/2007%2D05%2D25%2D%2Dgnome2%2Dlaptop.png

I switched to Linux in 2007 and no window manager I used since then had icons, apparently. Crazy. An icon-less existence for 18 years. (But yeah, everything is keyboard-driven here as well and there are no buttons here, either.)

Anyway, my draft is making progress:

https://movq.de/v/5b7767f245/s.png

I do like this look. 😊

I was drafting support for showing “application icons” in my window manager, i.e. the Firefox icon in the titlebar:

https://movq.de/v/0034cc1384/s.png

Then I realized: Wait a minute, lots of applications don’t set an icon? And lots of other window managers don’t show these icons, either? Openbox, pekwm, Xfce, fvwm, no icons.

Looks like macOS doesn’t show them, either?!

Has this grown out of fashion? Is this purely a Windows / OS/2 thing?

How to create issues and pull requests in record time on GitHub

Learn how to spin up a GitHub Issue, hand it to Copilot, and get a draft pull request in the same workflow you already know.

The post How to create issues and pull requests in record time on GitHub appeared first on The GitHub Blog. ⌘ Read more

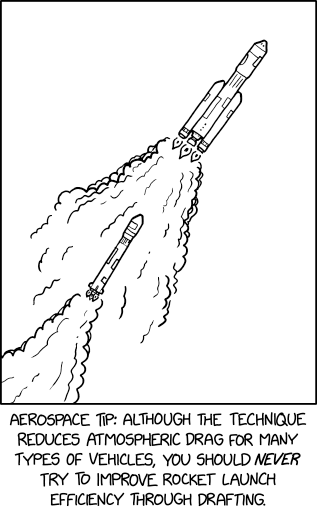

Drafting

⌘ Read more


i still want a tux plushie ngl. i’m gonna draft my sister (she can sew) into making me one

First draft of yarnd 0.16 release notes. 📝 – Probably needs some tweaking and fixing, but it’s sounding alright so far 👌 #yarnd

SDL2 ported to Mac OS 9

Well, this you certainly don’t see every day. This is a “rough draft” of SDL2 for MacOS 9, using CodeWarrior Pro 6 and 7. Enough was done to get it building in CW, and the start of a “macosclassic” video driver was created. It DOES seem to basically work, but much still needs to be done. Event handling is just enough to handle Command-Q, there is no audio, etc etc etc. ↫ A cast of thousands The hardest part was a video driver for the classic Mac OS, which had to be created mostly f … ⌘ Read more

@bender@twtxt.net (Feels a bit like his “edit” function could be implemented as “delete and re-draft”, but I’m only guessing here.)

Understanding surrogate pairs: why some Windows filenames can’t be read

Windows was an early adopter of Unicode, and its file APIs have used UTF-16 internally since Windows 2000. (They used UCS-2 in the Windows 95 era, when the Unicode standard was only a draft on paper, but that’s another topic.) Using UTF-16 means that filenames, text strings, and other data are stored as sequences of 16-bit units. For Windows, a properly formed surrogate pair is perfectly acceptable. However … ⌘ Read more
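The excerpt is cut off, so the following is only a guess at the kind of problem it goes on to describe. As a Python sketch: a character outside the Basic Multilingual Plane takes two 16-bit units in UTF-16 (a surrogate pair), while a lone surrogate on its own is not valid text and cannot be encoded as strict UTF-8. The chosen code point and variable names are illustrative.

```python
# U+1F600 lies outside the Basic Multilingual Plane, so UTF-16 needs two units.
emoji = "\U0001F600"
units = emoji.encode("utf-16-le")
high = int.from_bytes(units[0:2], "little")  # high (leading) surrogate
low = int.from_bytes(units[2:4], "little")   # low (trailing) surrogate
print(hex(high), hex(low))

# A lone high surrogate, i.e. one half of a pair without its partner.
lone = "\ud83d"
try:
    lone.encode("utf-8")
    encodable = True
except UnicodeEncodeError:
    encodable = False
print(encodable)
```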

There’s a reason I avoid speaking my mind on the internet like the plague. The same reason I’d set up a {B,Ph,Gem}log months ago but never got myself to publish any of the drafts in any of them.

[ANN] [CCS] Draft: Monero - Intro Video ‘What is Monero?’

In line with the new website design currently in progress, we’ve created an updated version of the “What is Monero?” video. We’ve kept the original script but made some factual updates. If approved, the plan is to create a light mode version too. Looking forward to feedback/input.

Links:

- https://redirect.invidious.io/watch?v=KkgqcLrNRvw

- [MO report](/vostoemisio-ccs-proposals-animated-videos-fcmps-what-is-monero/ … ⌘ Read more

[ANN] [CCS] Draft: New years resolutions

Link: https://repo.getmonero.org/monero-project/ccs-proposals/-/merge_requests/537

@plowsof:matrix.org ⌘ Read more

I’ve started a draft over at: https://git.mills.io/yarnsocial/twtxt.dev/src/branch/main/exts/webfinger.md

sec-t 2024

[This has been in my draft folder since September. Sorry! It’s been a few months with ups and downs, mostly downs. Energy to spend time on blogging has been low. Hell, energy to do much of anything has been low. I’m trying to clean out the drafts folder and will post more stuff.]

I attended the security conference sec-t 2024 in Stockholm the other week. I held a presentation during the Community Event, Wednesday September 11: “Verifying the Tillitis TKey”.

The TKey uses a novel way of helpin … ⌘ Read more

[ANN] [CCS Draft] NoShore: Groundwork for on-the-go offline payments

Please feel free to review the linked CCS-draft - any workable feedback is welcome!

Link: https://repo.getmonero.org/fullmetalScience/ccs-proposals/-/blob/noshore/fullmetalscience-noshore.md

u/fullmetalScience (monero.town) ⌘ Read more

[ANN] [CCS] Monero FCMP Video Draft

Please see ours and xenu’s video here about FCMP. We think it’s ready to get shared but looking forward to everyone’s feedback before we officially share it in the Social media channels.

Links:

@vostoemisio:matrix.org ⌘ Read more

More thoughts about changes to twtxt (as if we haven’t had enough thoughts):

1. There are lots of great ideas here! Is there a benefit to putting them all into one document? Seems to me this could more easily be a bunch of separate efforts that can progress at their own pace:

1a. Better and longer hashes.

1b. New possibly-controversial ideas like edit: and delete: and location-based references as an alternative to hashes.

1c. Best practices, e.g. Content-Type: text/plain; charset=utf-8

1d. Stuff already described at dev.twtxt.net that doesn’t need any changes.

We won’t know what will and won’t work until we try them. So I’m inclined to think of this as a bunch of draft ideas. Maybe later when we’ve seen it play out it could make sense to define a group of recommended twtxt extensions and give them a name.

Another reason for 1 (above) is: I like the current situation where all you need to get started is these two short and simple documents:

https://twtxt.readthedocs.io/en/latest/user/twtxtfile.html

https://twtxt.readthedocs.io/en/latest/user/discoverability.html

and everything else is an extension for anyone interested. (Deprecating non-UTC times seems reasonable to me, though.) Having a big long “twtxt v2” document seems less inviting to people looking for something simple. (@prologic@twtxt.net you mentioned an anonymous comment “you’ve ruined twtxt” and while I don’t completely agree with that commenter’s sentiment, I would feel like twtxt had lost something if it moved away from having a super-simple core.)

All that being said, these are just my opinions, and I’m not doing the work of writing software or drafting proposals. Maybe I will at some point, but until then, if you’re actually implementing things, you’re in charge of what you decide to make, and I’m grateful for the work.

This is only first draft quality, but I made some notes on the #twtxt v2 proposal. http://a.9srv.net/b/2024-09-25

@prologic@twtxt.net Thanks for writing that up!

I hope it can remain a living document (or sequence of draft revisions) for a good long time while we figure out how this stuff works in practice.

I am not sure how I feel about all this being done at once, vs. letting conventions arise.

For example, even today I could reply to twt abc1234 with “(#abc1234) Edit: …” and I think all you humans would understand it as an edit to (#abc1234). Maybe eventually it would become a common enough convention that clients would start to support it explicitly.

Similarly we could just start using 11-digit hashes. We should iron out whether it’s sha256 or whatever but there’s no need to get all the other stuff right at the same time.

I have similar thoughts about how some users could try out location-based replies in a backward-compatible way (append the replyto: stuff after the legacy (#hash) style).

However I recognize that I’m not the one implementing this stuff, and it’s less work to just have everything determined up front.

Misc comments (I haven’t read the whole thing):

Did you mean to make hashes hexadecimal? You lose 11 bits that way compared to base32. I’d suggest gaining 11 bits with base64 instead.
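The 11-bit figures work out if the hash is 11 characters long, as floated above. A small Python sketch of the arithmetic; the 11-character length is taken from the earlier suggestion and the `bits` helper is hypothetical.

```python
import math

HASH_LENGTH = 11  # assumption: the 11-character hashes suggested above


def bits(alphabet_size: int, length: int = HASH_LENGTH) -> int:
    """Total bits carried by `length` characters drawn from the given alphabet."""
    return length * int(math.log2(alphabet_size))


hex_bits = bits(16)     # 4 bits per character
base32_bits = bits(32)  # 5 bits per character
base64_bits = bits(64)  # 6 bits per character

print(hex_bits, base32_bits, base64_bits)
```

Each step up in alphabet size adds one bit per character, so at 11 characters the gap between neighbouring encodings is exactly 11 bits.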

“Clients MUST preserve the original hash” — do you mean they MUST preserve the original twt?

Thanks for phrasing the bit about deletions so neutrally.

I don’t like the MUST in “Clients MUST follow the chain of reply-to references…”. If someone writes a client as a 40-line shell script that requires the user to piece together the threading themselves, IMO we shouldn’t declare the client non-conforming just because they didn’t get to all the bells and whistles.

Similarly I don’t like the MUST for user agents. For one thing, you might want to fetch a feed without revealing your identity. Also, it raises the bar for a minimal implementation (I’m again thinking of the 40-line shell script).

For “who follows” lists: why must the long, random tokens be only valid for a limited time? Do you have a scenario in mind where they could leak?

Why can’t feeds be served over HTTP/1.0? Again, thinking about simple software. I recently tried implementing HTTP/1.1 and it wasn’t too bad, but 1.0 would have been slightly simpler.

Why get into the nitty-gritty about caching headers? This seems like generic advice for HTTP servers and clients.

I’m a little sad about other protocols being not recommended.

I don’t know how I feel about including markdown. I don’t mind too much that yarn users emit twts full of markdown, but I’m more of a plain text kind of person. Also it adds to the length. I wonder if putting it in a separate document would make more sense; that would also help with the length.

OAuth for Browser-Based Apps Working Group Last Call!

The draft specification OAuth for Browser-Based Applications has just entered Working Group Last Call! ⌘ Read more

I just “published” a #draft on my blog about “How I’ve implemented #webmentions for twtxt” (http://darch.dk/mentions-twtxt), so I wanted to know from you guys if you see yourself doing a similar thing with yarnd @prologic@twtxt.net or others with custom setups?

OAuth for Browser-Based Apps Draft 15

After a lot of discussion on the mailing list over the last few months, and after some excellent discussions at the OAuth Security Workshop, we’ve been working on revising the draft to provide clearer guidance and clearer discussion of the threats and consequences of the various architectural patterns in the draft. ⌘ Read more

New Draft of OAuth for Browser-Based Apps (Draft -11)

With the help of a few kind folks, we’ve made some updates to the OAuth 2.0 for Browser-Based Apps draft as discussed during the last IETF meeting in Philadelphia. ⌘ Read more

Maxime Buquet: Versioning

I finally took time to set up a forge and some old drafts turned up. I am publishing one of them today as is even though it’s 4 years old (2018-08-07T13:27:43+01:00). I’m not as grumpy as I was at the time but I still think this applies.

Today I am grumpy at people’s expectation of a free software project, about versioning and releases. I am mostly concerned about applications rather than libraries in this article but I am sure some of this would apply to libraries as well.

Today we were discussing ab … ⌘ Read more

I was just about to write a long response to a discussion I saw online. But while writing it, I realized that I have an opinion, but I can’t express it properly and somehow I don’t have anything to contribute. So I deleted my draft. I don’t have to give my two cents on everything. 😅 ⌘ Read more

China to tighten grip on social media comments, requiring sites to employ sufficient content moderators

The draft regulation requires platforms to employ a content moderation team commensurate with the scale of the services. ⌘ Read more

if your eag profile doesn’t say ‘neartermism’, you’ll automatically be drafted into the deepmind potential hires mailing list

It’ll track a bunch of finger(1) endpoints and let you see what’s new. Very early draft. Not actually a social network, more an anti-social network for ‘80s CompSci transplants. :-)

My website is very Piling. look at the todo list: https://niplav.github.io/todo.html! i can’t tell you much about how it will look like in a year, but i can tell you that it won’t shrink. it’s piling. everything is piling up, forgotten drafts, half-finished experiments, buggy code—fixed over time, sure, but much more slowly than the errors come rolling in. it’s an eternal struggle.